YA boards.ie thread complaining about An Post’s inability to deliver parcels : an absurd situation, and unlikely to improve. I’m surprised eBay or Amazon Ireland aren’t kicking up a fuss about this

(tags: parcels an-post ireland post delivery couriers)

IRMA harassing Irish music blogs : nialler9 says: ‘I would have posted an MP3 but IRMA asked me to take down some major label related tunes last week’

(tags: irma ireland mp3 music nialler9 blogs blogging)

2030Vision.ie : ‘2030 Vision is [..] the Strategic Transport Plan being developed by the Dublin Transportation Office for the Greater Dublin Area. It will be at the heart of all transport planning in the region from 2010 until 2030. [..] We wish to consult you in the development of the new transport strategy.’ Give your $.02 here, online

(tags: 2030vision dublin ireland transport road rail cycling commute life government dto ndp)

Open Source NG Databases : big thumbs up from Artur, jzawodny and others for CouchDB. interesting thread of comments from people using it for “real-world” stuff

(tags: databases couchdb db map-reduce erlang)

The std deviation of accident rate among recently-qualified drivers is higher than for the more experienced : An interesting, and counter-intuitive, demo of road safety statistics from Colm

(tags: statistics road-safety ireland driving l-plates standard-deviation)

Justin's Linklog Posts

how to reset an NTL.ie cable box : press “Help” then the yellow button. very useful for when it loses BBC4, which it’s just done _again_

(tags: cable tv television ntl ireland tips reset stb set-top-box)

Scaling Lucene and Solr : extremely detailed, lots of useful tips here (via mattb)

(tags: via:mattb lucene solr scaling scalability search java)

TxFlash : paper on “transactional flash” — transactions implemented at the filesystem level using useful properties of SSD storage. nice research

(tags: transactions transactional storage research flash ssd via:storagemojo)

Ireland not open for business, says Twitter innovator : an interview with @blaine after his relocation to Antrim. not a great article, really. in fairness, he’s obvs never had to relocate from Europe to the US; I’ve done it, and it’s as much of a bureaucratic minefield in my experience

(tags: ireland northern-ireland blaine-cook economics business)

Thanks to Pierce for pointing me at this review of an interesting-sounding book called Introduction to Information Retrieval. The book sounds quite useful, but I wanted to pick out a particularly noteworthy quote, on compression:

One benefit of compression is immediately clear. We need less disk space.

There are two more subtle benefits of compression. The first is increased use of caching … With compression, we can fit a lot more information into main memory. [For example,] instead of having to expend a disk seek when processing a query … we instead access its postings list in memory and decompress it … Increased speed owing to caching — rather than decreased space requirements — is often the prime motivator for compression.

The second more subtle advantage of compression is faster transfer of data from disk to memory … We can reduce input/output (IO) time by loading a much smaller compressed posting list, even when you add on the cost of decompression. So, in most cases, the retrieval system runs faster on compressed postings lists than on uncompressed postings lists.

This is something I’ve been thinking about recently — we’re getting to the stage where CPU speed has so far outstripped disk I/O speed and network bandwidth, that pervasive compression may be worthwhile. It’s simply worth keeping data compressed for longer, since CPU is cheap. There’s certainly little point in not compressing data travelling over the internet, anyway.
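As a rough illustration of that trade-off, here’s a minimal Python sketch. The data is synthetic, the disk throughput figure is an assumed number rather than a measurement, and `zlib` simply stands in for whatever codec a real retrieval system would use.

```python
import time
import zlib

# Synthetic stand-in for a postings list: sorted doc IDs, stored as text.
# (Hypothetical data; a real index would use a purpose-built encoding.)
postings = ",".join(str(i * 7) for i in range(200_000)).encode("ascii")

compressed = zlib.compress(postings, 6)

# Assumed sequential disk throughput in bytes/second; adjust for your hardware.
DISK_BYTES_PER_SEC = 50 * 1024 * 1024


def read_seconds(num_bytes: int) -> float:
    """Estimated time to pull num_bytes off disk at the assumed throughput."""
    return num_bytes / DISK_BYTES_PER_SEC


# Time the decompression step, since that is the CPU cost we pay for the smaller read.
start = time.perf_counter()
restored = zlib.decompress(compressed)
decompress_seconds = time.perf_counter() - start

assert restored == postings  # lossless round trip

raw_cost = read_seconds(len(postings))
packed_cost = read_seconds(len(compressed)) + decompress_seconds

print(f"raw: {len(postings)} bytes, est. {raw_cost * 1000:.2f} ms to read")
print(f"compressed: {len(compressed)} bytes, "
      f"est. {packed_cost * 1000:.2f} ms to read and decompress")
```

Whether the compressed path actually wins depends on the real disk and CPU involved, so treat the printed estimates as a way to plug in your own numbers rather than as a result.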

On other topics, it looks equally insightful; the quoted paragraphs on Naive Bayes and feature selection algorithms are both things I learned myself, "in the field", so to speak, working on classifiers — I really should have read this book years ago I think ;)

The entire book is online here, in PDF and HTML. One to read in that copious free time…

Recently, there’s been a bit of discussion online about whether or not it makes sense for companies to host server infrastructure at Amazon EC2, or on traditional colo infrastructure. Generally, these discussions have focussed on one main selling point of EC2: its elasticity, the ability to horizontally scale the number of server instances at a moment’s notice.

If you’re in a position to gain from elasticity, that’s great. But it is still worth noting that even if you aren’t in that position, there’s another good reason to host at an EC2-like cloud: you may want to deploy another copy of the app, either from a different version-control branch (dev vs staging vs production deployments), or to run separate apps with customizations for different customers. These cases aren’t scaling an existing app up; they’re creating new copies of the app, and EC2 works nicely for this.

If you can deploy a set of servers with one click from a source code branch, this is entirely viable and quite useful.

Another reason: EC2-to-S3 traffic is extremely fast and cheap compared to external-to-S3. So if you’re hosting your data on S3, EC2 is a great way to crunch on it efficiently. Update: Walter observed this too on the backend for his Twitter Mosaic service.

Elliotte Rusty Harold disses queueing : ‘More likely there’s something wrong with the whole design of network systems based on message queues, and we need to start developing alternatives.’ blimey! sounds like an atrocious implementation, or possibly AMQP itself; I’ve had great results with many (non-AMQP-based) queue systems

(tags: queueing java amqp messaging elliotte-rusty-harold)

I seem to have invented a new extreme sport on the way into work: Ice Cycling. The roads were like an ice-skating rink. Scary stuff :(

Here’s some advice for anyone in the same boat:

- use a high gear: avoid using low gear if possible, even when starting off. Low revs mean you’re more likely to get traction.

- try to avoid turns: keep the bike as upright as possible.

- try to avoid braking: braking is very likely to start a skid in icy conditions.

- use busy roads: where the ice has been melted by car traffic. In icy conditions, you should ride where the cars have been, since they’ll have melted the ice.

- ride away from the gutters: they’re more likely to be iced over than the centre of a lane. Again, ride where the cars have been.

- avoid road markings: it seems these were much icier than the other parts of the road; possibly because their high albedo meant the ice on them hadn’t been melted by the sun yet. So look out for that.

Here’s a good thread on cyclechat.co.uk, and don’t miss icebike.org: ‘Whether commuting to work, or just out for a romp in the woods, you arrive feeling very alive, refreshed, and surrounded with the aura of a cycling god. You will be looked upon with the smile of respect by friends and co-workers. – – – Or was that the sneer of derision…no matter, ICEBIKING is a blast!’ o-kay.

Their recommendations are pretty sane, though. ;)

amazing car-crash interview attempting to justify DRM from Microsoft : interview with MS UK’s Head Of Mobile attempting, and failing, to justify their DRM policy. Q: ‘If I buy these songs on your service – and they’re locked to my phone – what happens when I upgrade my phone in six months’ time?’ A: ‘Well, I think you know the answer to that.’

(tags: omgwtfbbq microsoft funny drm fail hugh-griffiths interviews mp3 music mobile)

Ryanair’s new €30 surcharge for >1 item of hand luggage : also applies to laptops. they truly are the most customer-hostile company in the world

(tags: ryanair wankers flight airlines travel laptops customer-service consumer surcharges)

Monty leaving Sun : he resigned due to the botched MySQL 5.1 release, and slowness in Sun’s actions to ‘fix our community and development problems’. regardless, he says they’re parting on good terms. His new company, Monty Program Ab, will be run by http://zak.greant.com/hacking-business-models — cool

(tags: mysql databases sun opensource acquisitions business-models monty jobs work)

ICANN loses $4.6M on the stock market : why was an important piece of internet infrastructure gambling on stocks? oh dear

(tags: via:johnlevine icann domains stocks crash money infrastructure internet)

Web Hooks : well-defined semantics for callbacks over HTTP, as seen in Google Code, Amazon Checkout, PayPal’s IPN and more

(tags: http web programming notification callbacks webhooks google paypal amazon)

Facebook’s Cassandra distributed database : bookmarking this a bit late, but going by the Google Code site it seems to be taking off quite nicely, and heading for the Apache Incubator

(tags: cassandra facebook java scalability p2p papers dynamo bigtable hbase database data-store distributed data storage)

explaining Python 2.5’s “with” keyword (PEP-343) : RAII syntactic sugar. I like

(tags: raii python with context-managers coding scope peps)

joint press release from Eircom and IFPI re the “3 strikes” case : ‘The record companies have agreed that they will take all necessary steps to put similar agreements in place with all other ISPs in Ireland.’

(tags: isps ireland eircom ifpi irma emi piracy filesharing bittorrent)

very odd comment from an Airtricity spokesman regarding their recent customer-data leak : ‘Airtricity has not taken legal action against any bloggers in relation to information posted about this incident.’ well, that’s good of them! wtf

(tags: omgwtfbbq airtricity cluetrain pr leaks privacy legal ireland blogging via:damienmulley)

An Interview With Adam Olsen, Author of Safe Threading : safethread is a nice patch for Python 3000 implementing a new approach to concurrency in the Python internals. I like the “branching-as-children” approach to threading, using variable scope to enforce the thread lifecycle

(tags: threading safethread python python3000 programming concurrency branching-as-children scoping)

Colm’s top productivity tip : leave it broken. hmm!

(tags: programming advice productivity coding)

good Bloom filter implementation advice : particularly for Java; reportedly a few of the open-source Bloom filter impls use poor choices of hash algorithm

(tags: hashing bloom-filters algorithms java programming)

Will Proof-of-Work Die a Green Death? : ‘The last thing we need is to deploy a system designed to burn all available cycles, consuming electricity and generating [CO2…] in order to produce small amounts of bitbux to get emails or spams through.’ Good point

(tags: proof-of-work hashcash anti-spam green environment john-gilmore via:emergentchaos security)

Wow. The IFPI’s strategy of "divide and conquer" by taking individual ISPs to court to force them to institute a 3 strikes policy, as successfully deployed against Eircom this week, is possibly marginally better than this insane obsolete-business-model handout proposed by the UK government in their Digital Britain report:

Lord Carter of Barnes, the Communications Minister, will propose the creation of a quango, paid for by a charge that could amount to £20 a year per broadband connection.

The agency would act as a broker between music and film companies and internet service providers (ISPs). It would provide data about serial copyright-breakers to music and film companies if they obtained a court order. It would be paid for by a levy on ISPs, who inevitably would pass the cost on to consumers.

Jeremy Hunt, the Shadow Culture Secretary, said: “A new quango and additional taxes seem a bizarre way to stimulate investment in the digital economy. We have a communications regulator; why, when times are tough, should business have to fund another one?”

Well said. An incredibly bad idea.

By the way, I’ve noticed some misconceptions about the Eircom settlement. Telcos selling Eircom bitstream DSL (i.e. the 2Mbps or 3Mbps DSL packages) are immune right now.

They are, however, next on the music industry’s hit-list, reportedly…

Joey Hess on his Google Summer of Code experiences : it didn’t work out so well for him. I have to concur to some degree; in my experience, GSOC mentoring is hard work

(tags: google summer-of-code gsoc coding software open-source spamassassin debian joey-hess ikiwiki)

Measurement Lab : new PlanetLab/Google collaboration with various web-based tools to measure your internet connection — including a handy test to see if your ISP slows down BitTorrent

(tags: google networking bittorrent performance bandwidth p2p speed network measurement analysis metrics isp netneutrality)

Eircom has been forced to implement "3 strikes and you’re out", according to Adrian Weckler:

If the music labels come to it with IP addresses that they have identified as illegal file-sharers, Eircom will, in its own words:

"1) inform its broadband subscribers that the subscribers IP address has been detected infringing copyright and

"2) warn the subscriber that unless the infringement ceases the subscriber will be disconnected and

"3) in default of compliance by the subscriber with the warning it will disconnect the subscriber."

My thoughts — it’s technically better than installing Audible Magic appliances to filter all outbound and inbound traffic, at least.

However, there’s no indication of the degree to which Eircom will verify the "proof" provided by the music labels, or that there’s any penalty for the labels when they accuse your laser printer of filesharing. I foresee a lot of false positives.

Update: LINX reports that the investigative company used will be Dtecnet, a ‘company that identifies copyright infringers by participating in P2P file-sharing networks’. TorrentFreak says:

DtecNet […] stems from the anti-piracy lobby group Antipiratgruppen, which represents the music and movie industry in Denmark. There are more direct ties to the music industry though. Kristian Lakkegaard, one of DtecNet’s employees, used to work for the RIAA’s global partner, IFPI. […]

Just like most (if not all) anti-piracy outfits, they simply work from a list of titles their client wishes to protect and then hunts through known file-sharing networks to find them, in order to track the IP addresses of alleged infringers.

Their software appears as a normal client in, for example, BitTorrent swarms, while collecting IP addresses, file names and the unique hash values associated with the files. All this information is filtered in order to present the allegations to the appropriate ISP, in order that they can send off a letter admonishing their own customer, in line with their commitments under the MoU.

[…] it will be a big surprise if [Dtecnet’s evidence is] of a greater ‘quality’ than the data provided by MediaSentry.

More coverage of the issues raised by the RIAA’s international lobbying for the 3-strikes penalty:

Some Things Need To Change : Mike Arrington finds himself victimised by crazies — ‘I write about technology startups and news. In any sane world that shouldn’t make me someone who has to deal with death threats and being spat on. It shouldn’t require me to absorb more verbal abuse than a human being can realistically deal with.’ holy crap

(tags: wtf technology techcrunch life blog michael-arrington startups crazy)

alternative Flickr photostream for my photos : via “I Hardly Know Her”, a sister site to muxtape.com. great UI for viewing a set of Flickr photos

(tags: flickr photos muxtape ui web pictures)

pgTAP: Unit Testing for PostgreSQL : ‘Unit Testing for PostgreSQL’. application of the perl-style TAP unit test protocol for testing an SQL database, good idea

(tags: unit-tests testing sql postgres database postgresql tap perl)

Updated: A Year Later, AOL Is Contemplating A Bebo Sale : fair dues to Bebo for pulling the wool over the eyes of the ad agencies, if this account is true. suckers!

(tags: media advertising business bebo acquisitions aol recession marketing london suckers)

Linus Switches From KDE to Gnome : +1, I’ve basically done this, too (apart from my JuK-based music system). good thread of comments, too; everyone pretty much agrees that KDE made a mess of the KDE4 release :(

(tags: kde kde4 slashdot gnome linux linus-torvalds desktops ui software-quality releases)

‘Megaupload auto-fill captcha’ Userscript : omg this is brilliant — a Greasemonkey user-script containing a neural network, *in Javascript*, to solve MegaUpload’s CAPTCHAs in your browser. this may be the coolest userscript ever

(tags: userscripts neural-networks javascript cool programming greasemonkey ai captcha via:reddit omg)

behind Masal Bugduv : The Times got pranked by an anonymous Irishman

(tags: masal-bugduv football funny the-times journalism via:bwalsh ireland irish gaeilge m-asal-beag-dubh)

SarahLacy.com: Google Dethroned? : is Google losing its knack? Twitter/Facebook more popular than Google during the inauguration; Hulu provide a better search experience than YouTube (although not so much for non-USians). interesting theory

(tags: google youtube hulu twitter facebook companies via:mattcutts)

intro to RabbitMQ and py-amqplib : nice and concise, with real-world deployment data. I wish this had been around when I was evaluating AMQP systems in PutPlace

(tags: python mq erlang amqp rabbitmq messaging queue introduction py-amqplib)

TechWire: How to approach a newspaper interview : really great advice for tech-section interviewees from Adrian Weckler

(tags: advice interviews newspapers journalism adrian-weckler tips pr)

best reddit comments thread ever : re ‘X# – XML oriented programming language; the foundation of an open source Enterprise Mashup Server, CRM and Groupware Suite’ — ‘When you do a mashup, you’re supposed to get your peanut butter in my chocolate, not lodge your fork in my goddamned eye socket’

(tags: mashups funny xsharp omgwtfbbq xml java hilarity reddit via:damienkatz)

xpra : ‘screen for remote X apps.’ sounds better-maintained and more usable than xmove or NX, to boot (via adulau)

(tags: via:adulau unix x11 desktop gui vnc ssh screen windows xpra)

Solvent : ‘a Firefox extension that helps you write screen scrapers for [semweb scraping platform] Piggy Bank.’ Nice idea — hook directly into the browser to specify scraping rules.

(tags: web firefox javascript scraping programming extensions xpath rdf browser semweb solvent)

I Am Here: One Man’s Experiment With the Location-Aware Lifestyle : great article by @mat on the benefits — and dangers — of pervasive geotagging

(tags: geotagging location mapping privacy twitter iphone mobile wired gps mobile-computing mat-honan via:waxy)

Google AppEngine abuse : possibly the first AppEngine site run by spammers — a fake storefront, reportedly

(tags: google appengine abuse web spam scams)

TweetBackup : free Twitter backup site; nicely done. runs daily; doesn’t need your Twitter password; and exports to text and HTML

(tags: twitter backup tweets web microblogging)

Anti-RDBMS: A list of distributed key-value stores : exhaustive, with great comments from many of the implementors

(tags: storage databases sca scalability memcached architecture aws performance simpledb couchdb dht voldemort scalaris hypertable hbase dynamo)

Green party says sorry for e-mail gaffe – Times Online : wow, the “communications manager” for the Green Party is an unrepentant asshat. ‘some bloggers have developed their own set of rules about how they should be approached and the e-mail in question fell foul of these rules.’ Nice non-apology there! Entirely wrong — Unsolicited Bulk Email is spam, even if you’re a politician

(tags: politics ireland spam email greens ube)

Irish Torrents : ‘Torrents of RTE, TV3, TG4 content’. a great collection of Irish TV programmes — unclear how well they’re seeded, or their rip quality, though

(tags: irish torrents bittorrent tv television ireland rte tv3 tg4)

I’ve switched my home broadband from Eircom’s 3Mbps all-in-one package to Magnet’s 10Mbps LLU package. It’s about a tenner a month cheaper, and significantly faster of course.

The modem arrived last Friday, about 2 weeks after ordering; that night, when I went to check my mail, I noticed that the DSL had gone down, and indeed so had the phone. I was dreading a weekend without the interwebs, it being 9pm on Friday night — but lo, when I plugged in the Magnet router, it all came up perfectly first time!

Great instructions too. Extremely readable and quite comprehensible for a reasonably non-techie person, I’d reckon. So far, they’ve provided great service, too.

I’m not actually getting the full 10Mbps, unfortunately; it’s RADSL, and I’m only getting 5Mbps when I test it. Just as well I didn’t pay the extra tenner to get their 24Mbps package. Still, that’s a hell of a lot faster than the sub-1Mbps speeds I’ve been getting from Eircom.

It’s hard to notice an effective difference when browsing though, as that kind of traffic is dominated by latency effects rather than throughput.
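A back-of-the-envelope model shows why. This is only a sketch with made-up numbers (the round-trip time, request count and page size are assumptions, not measurements from either connection), treating a page load as a series of sequential round trips plus the raw transfer time:

```python
def page_load_seconds(rtt_ms: float, round_trips: int,
                      page_bytes: int, mbps: float) -> float:
    """Crude model: sequential round trips plus raw transfer time."""
    latency = round_trips * rtt_ms / 1000.0
    transfer = page_bytes * 8 / (mbps * 1_000_000)
    return latency + transfer


# Hypothetical page: 100 kB in total, 30 sequential round trips, 60 ms RTT.
for mbps in (1, 5, 10):
    total = page_load_seconds(rtt_ms=60, round_trips=30,
                              page_bytes=100_000, mbps=mbps)
    print(f"{mbps:>2} Mbps: {total:.2f} s")
```

With these assumed figures the fixed round-trip cost is 1.8 s, and doubling the line speed from 5 to 10 Mbps trims less than a tenth of a second off the total.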

I haven’t even tried their "PCTV" digital TV system; it seems a bit pointless really, I have a networked PVR already, and anyway I doubt they support Linux.

One thing that’s weird: when my wife attempts to view video on news.bbc.co.uk on her Mac running Firefox, it stalls with the spinny "loading video" image, and the status line claims that it’s downloading from "ad.doubleclick.net". This worked fine (of course) on Eircom. If I switch to my user account and use Firefox there, it works fine, too — possible difference being that I’m using AdBlock Plus and she’s not. Something to do with the number of simultaneous TCP connections to multiple hosts, maybe? Very odd anyway. It’d be nice to get some time to sit down with tcpdump and figure this one out… any suggestions?

Amazon.com: Your Associate Website Browsing Settings : ‘We keep a record of your visits to Amazon Associate web pages that have Amazon.com content links on them. Among other things, we use this information to better personalize your web experience and improve our Amazon services.’ uh, *no*

(tags: amazon for:jkeyes opt-out ads advertising privacy personalization)

Irish e-tailers’ returns policy : Article by Adrian Weckler — Komplett’s returns policy sounds pretty good in particular. and ~50% of complaints at the European Consumer Centre are relating to delivery problems — that’s shocking :(

(tags: delivery couriers shopping adrian-weckler komplett elara sbp returns retailers consumer)

Capistrano: From the Beginning : wow, the Rails community have really reinvented a lot of wheels ;)

(tags: capistrano rails deploy sysadmin ruby management)

Why Google Employees Quit : massive leak of gripe mails from ex-Googlers about why they left the company

(tags: google techcrunch staff employment satisfaction workplace myths recession jobs management culture hr)

A Fistful Of Dollars: The Story Of A Kiva.org Loan : ‘I decided it would be great to try and follow one [Kiva.org microloan] through the system from start to finish, for the benefit of my colleagues who I coaxed into making a loan, and for myself, and for anyone else who is interested.’

(tags: kiva loans microloans charity money video towatch)

Popular Chinese Filtering Circumvention Tools DynaWeb FreeGate, GPass, and FirePhoenix Sell User Data : well, that’s a business model I suppose

(tags: privacy anonymity china security surveillance filtering censorship gpass gfw firephoenix database circumvention internet proxies)

UK ISPs blocking archive.org : due to another overbroad IWF block

(tags: iwf censorship isps uk archive.org the-register)

behind the ‘interview with an adware author’ story : paperghost strongly doubting that Matt Knox was quite so squeaky-clean as his recent interview would suggest

(tags: paperghost security malware adware blackhat nasty interviews)

HDFS Reliability : short paper from Tom White with post-mortems of some common failures of HDFS in the field, and some best practices to avoid them

(tags: best-practices hadoop hdfs dfs cloud-computing reliability software storage java tom-white bugs)

Ross Anderson’s anti-botnet idea : ‘if you complain to abuse@ somebody or other dot com, and more than three hours after that, you get more phish or spam from the same infected machine, then you should have a legal right to claim €10 from them. No need to prove malice, no need to prove actual damage, just “here’s the bill”. A similar scheme has largely sorted out late flights, cancellations and overbookings among cheap airlines in Europe, because now you get €250 [if] EasyJet or Ryanair bump you off the flight to Barcelona.’

(tags: anti-spam ross-anderson via:derek botnets security isps abuse)

Dual Pricing.IE : exposing the rip-off pricing of products sold in both Ireland and the UK, with much more expensive prices in .ie. Boots Sanex deodorant is the current worst offender, at UKP0.99 vs EUR2.99

(tags: ireland shopping comparison via:mulley uk pounds euro rip-off consumer)

Spam Botnets to Watch in 2009 – Research – SecureWorks : interesting to note that spam rates from botnets unaffected by the McColo shutdown went up during that time, indicating that the spammers are simply renting botnet time and their spam is relatively portable to other engines

(tags: anti-spam botnets secureworks 2009)

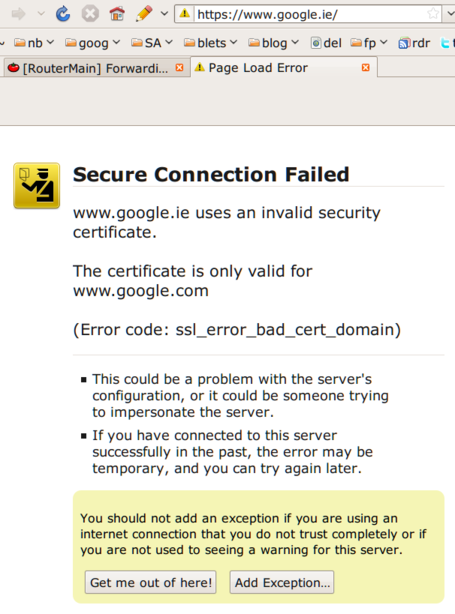

Check out what happens when you visit https://www.google.ie/ :

Clicking through Firefox’s ridiculous hoops gets me these dialogs:

Good work, Google and Firefox respectively!

Project Voldemort : ASL-licensed DHT from LinkedIn

(tags: dht apache hashtable voldemort linkedin java storage networking cloud scalability)

Twitter leaked visitor usernames to third-party sites via Google Analytics : excellent research by Des Traynor. oops! the hole has since been patched

(tags: twitter privacy analytics tracking google des-traynor exploits vulnerabilities json javascript security)

How to Brew – By John Palmer : book on home brewing, recommended by Glynn Foster

(tags: beer homebrew brewing hobbies project diy book toreadf)

How to sync Google Calendar with KOrganizer : using GCalDaemon — this works very nicely. it’d be nicer if it was built into KOrganizer, but hey

(tags: gcaldaemon google google-calendar scheduling calendar kde korganizer howto apps pim)

Interview with an Adware Author : great interview. ‘In your professional opinion, how can people avoid adware?’ ‘Um, run UNIX.’

(tags: adware windows blackhat bho malware viruses spyware vista xp)

Xarvester, the new Srizbi? : the evidence looks pretty convincing

(tags: srizbi xarvester marshal botnets anti-spam)

Supervisor : ‘a client/server system that allows its users to monitor and control a number of processes on UNIX-like operating systems.’ looks quite sensible, BSD licensed, Python, works on Linux, Solaris, OSX and FreeBSD (via Conall)

(tags: via:conall supervisor daemon monitoring daemontools sysadmin management deployment service init unix linux)

A coworker today, returning from a couple of weeks holiday, bemoaned the quantities of spam he had to wade through. I mentioned a hack I often used in this situation, which was to discard the spam and download the 2 weeks of supposed-nonspam as a huge mbox, and rescan it all with spamassassin — since the intervening 2 weeks gave us plenty of time for the URLs to be blacklisted by URIBLs and IPs to be listed by DNSBLs, this generally results in better spamfilter accuracy, at least in terms of reducing false negatives (the "missed spam"). In other words, it gets rid of most of the remaining spam nicely.

Chatting about this, it occurred to us that it’d be easy enough to generalize this hack into something more widely useful by hooking up the Mail::IMAPClient CPAN module with Mail::SpamAssassin, and in fact, it’d be pretty likely that someone else would already have done so.

Sure enough, a search threw up this node on perlmonks.org, containing a script which did pretty much all that. Here’s a minor freshening: download

reassassinate – run SpamAssassin on an IMAP mailbox, then reupload

Usage: ./reassassinate --user jmason --host mail.example.com --inbox INBOX --junkfolder INBOX.crap

Runs SpamAssassin over all mail messages in an IMAP mailbox, skipping ones it’s processed before. It then reuploads the rewritten messages to two locations depending on whether they are spam or not; nonspam messages are simply re-saved to the original mailbox, spam messages are sent to the mailbox specified in "--junkfolder".

This is especially handy if some time passed since the mails were originally delivered, allowing more of the message contents of spam mails to be blacklisted by third-party DNSBLs and URIBLs in the meantime.

Prerequisites:

- Mail::IMAPClient

- Mail::SpamAssassin
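The script itself is Perl; purely as an illustration of the flow described above, here’s a minimal Python sketch. It is not the script: the `X-Reassassinated` marker header and the function names are hypothetical, and it assumes the `spamassassin` command-line tool is on the path and flags spam with an `X-Spam-Flag: YES` header. The IMAP fetch and re-upload steps are left out, so only the skip, scan and route logic is shown.

```python
import subprocess
from email import message_from_bytes
from typing import Callable, Iterable, List, Tuple

MARKER = "X-Reassassinated"  # hypothetical header used to skip processed mail


def scan_with_spamassassin(raw: bytes) -> bytes:
    """Pipe one message through the spamassassin CLI; return the rewritten mail."""
    result = subprocess.run(["spamassassin"], input=raw,
                            stdout=subprocess.PIPE, check=True)
    return result.stdout


def rescan(messages: Iterable[bytes],
           scan: Callable[[bytes], bytes] = scan_with_spamassassin
           ) -> Tuple[List[bytes], List[bytes]]:
    """Rescan each unprocessed message and split the results into (nonspam, spam)."""
    nonspam: List[bytes] = []
    spam: List[bytes] = []
    for raw in messages:
        if message_from_bytes(raw)[MARKER] is not None:
            continue  # processed on an earlier run: leave it where it is
        rewritten = message_from_bytes(scan(raw))
        rewritten[MARKER] = "1"  # remember that this one has been rescanned
        is_spam = rewritten.get("X-Spam-Flag", "").strip().upper() == "YES"
        (spam if is_spam else nonspam).append(rewritten.as_bytes())
    return nonspam, spam
```

In a full version, the `nonspam` list would be re-saved to the original mailbox and the `spam` list uploaded to the junk folder, for example with the standard library’s `imaplib`. Passing the scanner in as a parameter makes it easy to try the routing logic with a stub before pointing it at real mail.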

Neil Fraser: Writing: Differential Synchronization : Impressive N-way sync algorithm for syncing text. ‘Differential synchronization offers scalability, fault-tolerance, and responsive collaborative editing across an unreliable network.’

(tags: research networking sync diff patch synchronization neil-fraser google development editing collaboration algorithms differential-synchronization)

McSweeney’s Internet Tendency: The Elements of Spam : ‘(Excerpts courtesy of William Strunk Jr., E.B. White, and Generouss Q. Factotum.)’

(tags: funny spam writing parody english mcsweeneys usage grammar spelling humour)

A Warning About the Real Cost of Microformats : Gordon Luk: ‘I’m done with microformats. From now on, i’m either building separate developer tools and relationship, or i’m not’

(tags: html microformats web semweb gordon-luk xhtml)

AWS Console : a nice AJAXy web-based GUI for EC2. awesome! Even a commandline weenie like myself can see how useful this is

(tags: ec2 aws amazon web gui ajax)

Map/Reduce and Queues for MySQL using Gearman : A talk by Eric Day and Brian Aker at the upcoming MySQL Conference in April: ‘[Gearman] development is now active again with an optimized rewrite in C, along with features such as persistent message queues, queue replication, improved statistics, and advanced job monitoring. For MySQL, there is also a new user defined function to run Gearman jobs, as well as the possibility to write your own aggregate UDFs using Gearman. This gives you the ability to run functions in separate processes, separate servers, and in other languages. The Gearman framework gives you a robust interface to also run these functions reliably in the “cloud”. This session will introduce these concepts and give examples of sample applications.’ Persistent queues (at last)? Gearman integration directly in the DB? excellent!

(tags: gearman queueing mysql databases brian-aker mapreduce sql conferences talks papers)

Twitter hack actually due to dictionary attack : see also http://blog.wired.com/27bstroke6/2009/01/professed-twitt.html . So, some more Twitter antipatterns: 1. user account with admin privileges, instead of role account; 2. admin account without two-factor auth; 3. no rate limits or other dictionary-attack defenses

(tags: twitter security webdev lessons antipatterns dictionary-attack accounts authorization authentication role-accounts two-factor-authentication rate-limiting via:simonw)

Google’s Browser Security Handbook : by lcamtuf, a GOOG employee these days. comprehensive. ‘provide[s] web application developers, browser engineers, and information security researchers with a one-stop reference to key security properties of contemporary web browsers’

(tags: security web google http browsers javascript html reference lcamtuf via:aecolley webdev)

Wiggle.co.uk : another option for online bike sales, tipped by Boards.ie denizens. no free shipping here though

(tags: shopping bikes uk cycling)

how to install from .ISO in vmware server 2.0 : omg this is utterly idiotic. not impressed

(tags: vmware-server vmware iso installation ui confusing broken)

BikeToWork – boards.ie Wiki : a good collection of additional factoids about the govt bike-to-work scheme

(tags: ireland cycling cycle-to-work bikes boards commuting tax)

Rechargeable Battery Review AAA NiMH : same again, for AAA batteries this time (via IRR)

(tags: aaa batteries rechargeable via:irregulars recharging nimh electronics reviews testing)

The Great Battery Shootout : rechargeable batteries put to the test (a few years ago at least). quick summary: Panasonic shite, Energizer 2300 good (via IRR)

(tags: via:irregulars batteries recharging rechargeable aaa aa electronics testing reviews nimh charger power)

The cycle to work scheme : Green Party site on the new Cycle-to-Work scheme, whereby the govt will provide a tax exemption if your employer buys you a bike up to EUR1000 in value

(tags: greens green cycling work hr cycle-to-work tax commuting)

Closing the ‘Collapse Gap’: the USSR was better prepared for collapse than the US : I came across this a while back and have been trying to find it again ever since. Good document on what happened in the former USSR after its society collapsed, and pretty funny too. A bit heavy-handed in its criticism of the US though (via Bruce Sterling)

(tags: politics history usa government society russia ussr economy collapse sustainability orlov futurism america world)Moviestar.ie now fulfilled by Screenclick.com : Irish DVDs-by-mail market consolidation

(tags: screenclick moviestar.ie dvd film tv ireland business mergers)LINX Public Affairs – Cinema ratings and web sites : Good commentary on the absurdity of the UK govt’s attempts to impose age-rating certs on websites. ‘From a regulatory point of view, at least part of the Internet is more like a pub, football crowd or playground than it is like a TV programme.’

(tags: linx ratings censorship uk politics filtering web andy-burnham free-speech)

shouting at a disk can increase latency : funny anecdote. bookmarked mainly because of the nice SmokePing-like chart they’re using to plot I/O latency stats… wonder if I can apply similar dataviz for spamassassin’s rule-QA…

(tags: rule-qa spamassassin dataviz infoviz latency smokeping graphs charts storage audio shouting disks solaris funny)

Want to rent a house in Stoneybatter? : I’m letting out our house in the ‘batter; 2 bedrooms, 15 mins from town, cosy, great neighbourhood. A little bit of heaven in Dublin 7

(tags: stoneybatter ads housing rental dublin-7 d7 kirwan-st neighbourhood daft)

The Ultimate Commodore 64 Talk @25C3 : in-depth exploration of the C=64, right down to the I/O bus, in a 64-minute presentation

(tags: hardware c=64 commodore-64 retrocomputing programming talks assembly 6502 6510 commodore via:vvatsa)Mozilla bug finds MITM attack in the wild : annoying Firefox “blah.foo.org uses an invalid security certificate” warnings cause user to open a bug at the Moz bugzilla, whereupon it is discovered that they are being haxx0red

(tags: mitm security firefox mozilla usability pki ssl man-in-the-middle)

major SSL/TLS cert vendor issued certificates without any verification whatsoever : ‘Five minutes later I was in the possession of a legitimate certificate issued to mozilla.com – no questions asked – no verification checks done – no control validation – no subscriber agreement presented, nothing.’ uh, massive FAIL

(tags: fail ssl tls security encryption comodo)np237: The session non-manager : GNOME’s current “stable” release, appearing in FC10 and Ubuntu 8.10, contains absolutely no session management, at all. wtf

(tags: ubuntu fedora gnome releases software wtf session-management x11)